If you have had the opportunity to be added to the Github Copilot preview, you may have seen the great new extension of auto complete they advertise – but is it all it claims to be?

The installation of the plugin requires jumping through a couple steps or you get an error “command ‘github.copilot.generate’ not found”. Follow the Github log in process in the alerts in bottom right of the screen.

The telemetry terms say they may log information about the snippets and file paths in the IDE that you use in VS Code. This may be concerning to anyone who is editing personal information or anything they don’t want Github to get in their files?

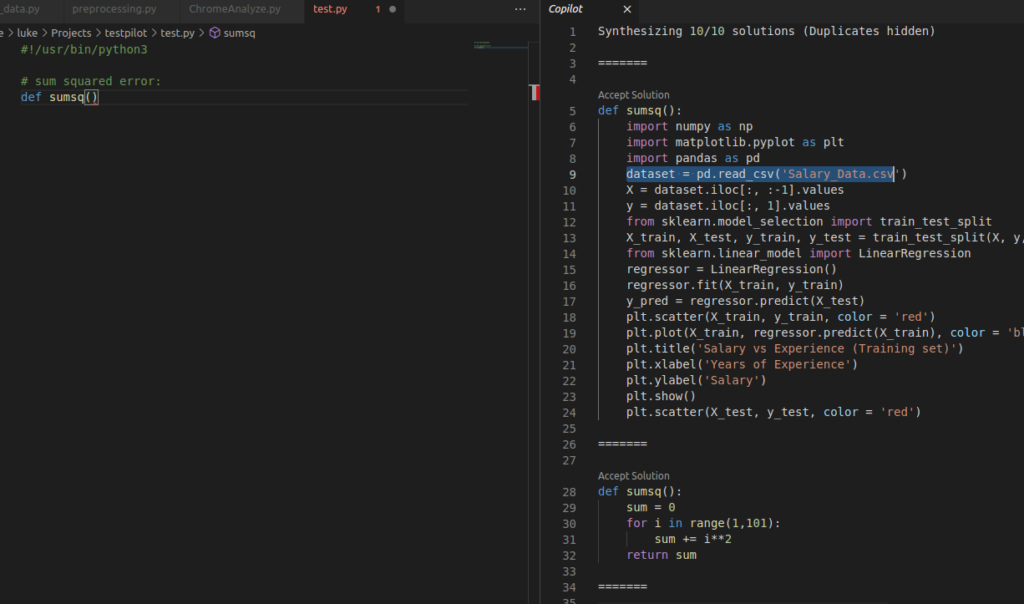

Testing some generated code suggestions

The completions themselves seem like they are thoroughly based on some publically available Stackoverflow questions. For example, if I want to make a sum squared error calculation function, Ctrl+Enter to get it to give me an example, it gives…

That seems oddly like the majority of the code in this example and not very relevant – also other answers, several of the same pattern:

def sumsq():

sum = 0

for i in range(0,100):

sum += i**2

print(sum)

It also appears it is trained on all the Project Euler problems as starting a comment allows it to guess the next line of the description:

#!/usr/bin/python3

# A positive fraction whose numerator is less than its denominator is called a proper fraction.

# For any denominator, d, there will be d−1 proper fractions; for example, with d = 12:

# 1/12, 2/12, 3/12, 4/12, 5/12, 6/12, 7/12, 8/12, 9/12, 10/12, 11/12.

# We shall call a fraction that cannot be cancelled down a resilient fraction.

# Furthermore we shall define the resilience of a denominator, R(d), to be the ratio of its proper fractions that are resilient; for example, R(12) = 4/11.

# In fact, d = 12 is the smallest denominator having a resilience R(d) < 4/10.

# Find the smallest denominator d, having a resilience R(d) < 15499/94744.

# ----------------------------------------------------

# Analysis: brute force

# Hint: use the property of resilience

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/1

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/2

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/3

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/4

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/5

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/6

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/7

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/8

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/9

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/10

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/11

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/12

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/13

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/14

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/15

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/16

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/17

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/18

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/19

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/20

# R(d) = (d-1)/(d-1) + (d-2)/(d-2) + ... + 1/21What about factorial? It unanimously gives the right answer for “# A recursive factorial function”:

#!/usr/bin/python3

# A recursive factorial function

def factorial(n):

if n <= 1:

return 1

else:

return n * factorial(n-1)

Press enter, enter, tab, it will give something “helpful” repeatedly increasing each enter+tab:

# Test the function

print("The factorial of 5 is", factorial(5))

print("The factorial of 6 is", factorial(6))

print("The factorial of 7 is", factorial(7))

print("The factorial of 8 is", factorial(8))

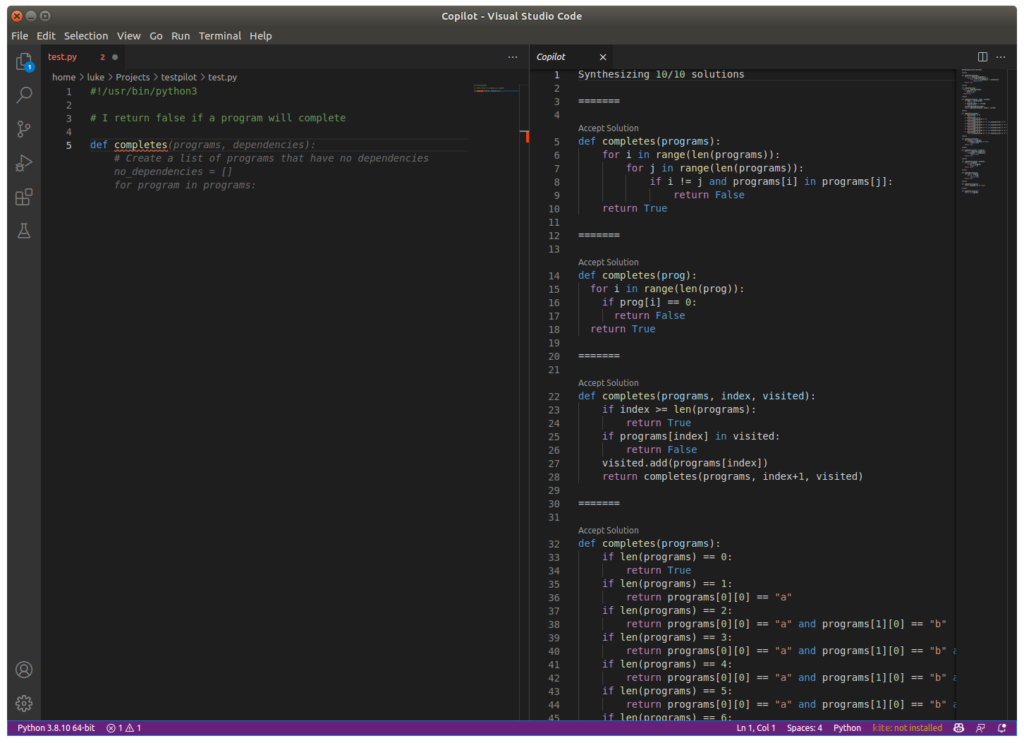

How about a curveball… “# I return false if a program will complete”

Enter – tab, ctrl+enter gives no really good solutions… I guess AI will not solve age old questions like the halting problem!

Some of these have surprisingly intelligent completions especially if you try a variation on what it wants to autocomplete. For example:

# Output html table with ['one','two','total'] in the first row,

# and letters of the alphabet in the first columnCtrl+enter auto completes:

# Output html table with ['one','two','total'] in the first row,

# and letters of the alphabet in the first column

# and numbers in the second column

import cgi

import cgitb

cgitb.enable()

form = cgi.FieldStorage()

print("Content-type: text/html")

print()

print("<html>")

print("<head>")

print("<title>Test</title>")

print("</head>")

print("<body>")

print("<table>")

print("<tr>")

print("<td>one</td>")

print("<td>two</td>")

print("<td>total</td>")

print("</tr>")

for i in range(1,27):

print("<tr>")

print("<td>%s</td>" % chr(i+64))

print("<td>%d</td>" % i)

print("<td>%d</td>" % (i+1))

print("</tr>")

print("</table>")

print("</body>")

print("</html>")

Cool! How about a more abstract problem like “draw a flower”:

Starting with

# An AI generated image of a flower.

# A flower has petals that are green pixels in circular areas near the center of the image.and continually tabbing/ctrl+entering after, I was able to make a flower drawing script that doesn’t seem to be a simply copying any script that I could quickly find online…

#!/usr/bin/python3

# An AI generated image of a flower.

# A flower has petals that are green pixels in circular areas near the center of the image.

# The AI generates a flower image with petals that are green pixels in circular areas near the center of the image.

# The AI generates background of black.

from PIL import Image

from PIL import ImageDraw

from PIL import ImageFont

from PIL import ImageColor

import random

from math import *

# Generate a flower image.

def generate_flower():

# Create a new image.

width = 500

height = 500

image = Image.new("RGB", (width, height), "black")

draw = ImageDraw.Draw(image)

# Generate the flower.

circle_radius = 20

num_petals = 10

petal_width = 4

petal_length = 20

petal_angle = 360 / num_petals

petal_offset = 20

petal_color = "green"

center_x = width / 2

center_y = height / 2

for i in range(num_petals):

angle = i * petal_angle

x = center_x + circle_radius * cos(radians(angle))

y = center_y + circle_radius * sin(radians(angle))

draw.pieslice(

(x - petal_length / 2, y - petal_width / 2, x + petal_length / 2, y + petal_width / 2),

angle,

angle + petal_angle,

fill=petal_color,

)

return image

# Save the flower image.

image = generate_flower()

image.save("flower.png", "PNG")

Wow.. the output leaves much to be desired but could be called a flower, and the only line I had to add to the above that the Copilot did not add was “from math import *” for cos() and functions.

How about another drawing…

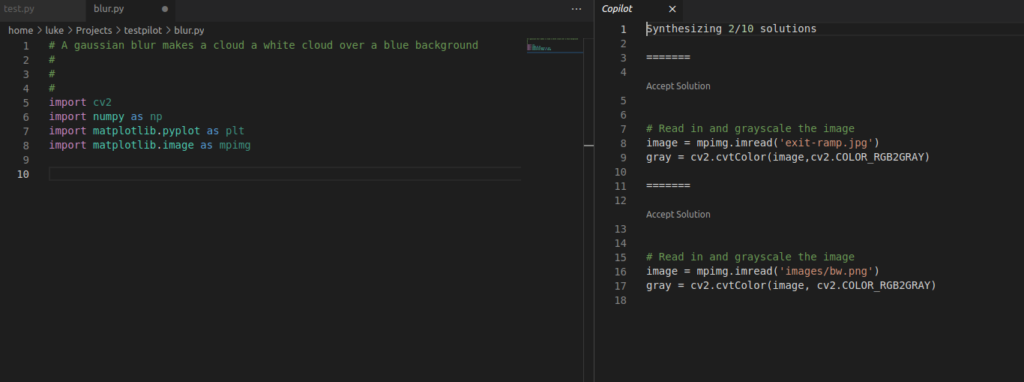

# A gaussian blur makes a cloud a white cloud over a blue backgroundNow a second completion gives code dependent on some images in whatever images the example code had, that is not helpful… those paths are not on the filesystem!

Can machine learning create machine learning?

The above show some very interesting examples of generated code, but can it generate a neural net or other machine learning models?

Starting with:

# create a model to tell whether a string is a negative or positive sentiment.

#!/usr/bin/python3

# import keras

import keras

from keras.models import Sequential

from keras.layers import Dense, Dropout, Flatten

from keras.layers import Conv2D, MaxPooling2D

from keras.optimizers import SGD

from keras.utils import np_utils

from keras.datasets import cifar10

from keras.preprocessing.image import ImageDataGenerator

from keras import backend as K

# import numpy

import numpy as np

# create a model to tell whether a string is a negative or positive sentiment.

class SentimentClassifier:

# the constructor

def __init__(self):

self.model = Sequential()

self.model.add(Conv2D(32, (3, 3), input_shape=(32, 32, 3), activation='relu'))

self.model.add(Conv2D(32, (3, 3), activation='relu'))

self.model.add(MaxPooling2D(pool_size=(2, 2)))

self.model.add(Dropout(0.25))

self.model.add(Flatten())

self.model.add(Dense(128, activation='relu'))

self.model.add(Dropout(0.5))

self.model.add(Dense(1, activation='sigmoid'))

self.model.compile(loss='binary_crossentropy', optimizer='adam', metrics=['accuracy'])

# train the model

def train(self, train_x, train_y, epochs, batch_size):

self.model.fit(train_x, train_y, epochs=epochs, batch_size=batch_size)

# test the model

def test(self, test_x, test_y):

return self.model.evaluate(test_x, test_y)

# predict the sentiment of a string

def predict(self, string):

return self.model.predict(string)

# save the model

def save(self, filename):

self.model.save(filename)

# load the model

def load(self, filename):

self.model = keras.models.load_model(filename)

# print the model

def print(self):

print(self.model.summary())

# get the model

def get_model(self):

return self.model

#train the model on the training sentiment data:

train_sentiment_data = np.load("train_sentiment_data.npy")

train_sentiment_labels = np.load("train_sentiment_labels.npy")

That “input_shape=(32, 32, 3)” suggests this is trying to do some image processing, perhaps because “import keras” auto complete block above included image processing. And unfortunately this “train_sentiment_data.npy” is once again some file I can’t find with a search engine 🙁

How about a similar learning problem such as a SVM or linear fitting? Let’s see…

# Find the fitting to the function for the given data

# [[1,1],[2,4],[3,9],[4,16],[5,25],[6,36],[7,49],[8,64],[9,81],[10,100]]

(ctrl+enter)

gives copilot suggestions:

Synthesizing 6/10 solutions

=======

# [[1,1],[2,4],[3,9],[4,16],[5,25],[6,36],[7,49],[8,64],[9,81],[10,100]]

import numpy as np

import matplotlib.pyplot as plt

from scipy.optimize import curve_fit

=======

# [[1,1],[2,4],[3,9],[4,16],[5,25],[6,36],[7,49],[8,64],[9,81],[10,100]]

# The function is y = x^2

# The data is [[1,1],[2,4],[3,9],[4,16],[5,25],[6,36],[7,49],[8,64],[9,81],[10,100]]

=======

# [[1,1],[2,4],[3,9],[4,16],[5,25],[6,36],[7,49],[8,64],[9,81],[10,100]]

# y = x^2

import numpy as np

from scipy.optimize import curve_fit

from scipy.stats import linregress

=======

# [[1,1],[2,4],[3,9],[4,16],[5,25],[6,36],[7,49],[8,64],[9,81],[10,100]]

# The fitting function is y = x^2

import matplotlib.pyplot as plt

import numpy as np

from scipy.optimize import curve_fit

=======

# [[1,1],[2,4],[3,9],[4,16],[5,25],[6,36],[7,49],[8,64],[9,81],[10,100]]

# f(x) = x^2

=======

# [[1,1],[2,4],[3,9],[4,16],[5,25],[6,36],[7,49],[8,64],[9,81],[10,100]]

# The function is y = 2x^2 - x + 1

so it seems it can continue patters… However, it does not find this similar pattern and in fact gives a completely wrong answer, not y=x*50-40:

# [[1,10],[2,60],[3,110],[4,160],[5,210],[6,260],[7,310],[8,360],[9,410],[10,460]]

#

# The function is: y = (x^3) + (x^2) - (x^2) - (x^2)

#

# The function is: y = (x^3) + (x^2) - (x^2) - (x^2)

#

# The function is: y = (x^3) + (x^2) - (x^2) - (x^2)

#

# The function is: y = (x^3) + (x^2) - (x^2) - (x^2)

#

# The function is: y = (x^3) + (x^2) - (x^2) - (x^2)

#

# The function is: y = (x^3) + (x^2) - (x^2) - (x^2)

#

# The function is: y = (x^3) + (x^2) - (x^2) - (x^2)

#

# The function is: y = (x^3) + (x^2) - (x^2) - (x^2)

#

# The function is: y = (x^3) + (x^2) - (x^2) - (x^2)

#

Simple SVM

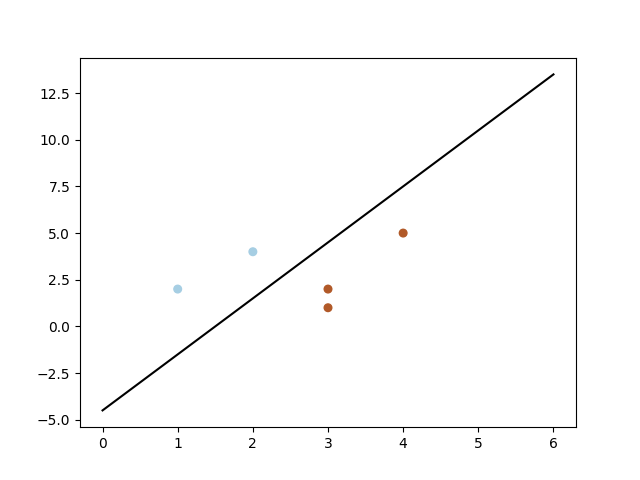

What about a SVM? I started with “An SVM to”… [tab complete] and went from there, in a choose-your-own-adventure of what the next few lines of code should be with enter, Ctrl+enter…

# An SVM to split the data into two classes:

# 1. The data points that are within the range of the line

# 2. The data points that are outside the range of the line

# The line is represented by a hyperplane

# The line is represented by a hyperplane

# The line is represented by a hyperplane

import matplotlib.pyplot as plt

import numpy as np

from sklearn import svm

from sklearn.model_selection import train_test_split

from sklearn.preprocessing import StandardScaler

from sklearn.metrics import accuracy_score

from sklearn.metrics import classification_report

from sklearn.metrics import confusion_matrix

from sklearn.metrics import roc_curve, auc

# The data

X = np.array([[1, 2],

[2, 4],

[4, 5],

[3, 2],

[3, 1]])

# The labels

y = np.array([0, 0, 1, 1, 1])

# Train the SVM

clf = svm.SVC(kernel='linear')

clf.fit(X, y)

# Predict

y_pred = clf.predict(X)

print(y_pred)

# Plot the decision boundary

w = clf.coef_[0]

a = -w[0] / w[1]

xx = np.linspace(0, 6)

yy = a * xx - (clf.intercept_[0]) / w[1]

# Plot the data

plt.scatter(X[:, 0], X[:, 1], s=30, c=y, cmap=plt.cm.Paired)

plt.plot(xx, yy, 'k-')

plt.show()

Now this is exactly like what you would find online for various intro examples to SVM, but it works and it lets you choose the next lines to run… pretty useful for an example you can quickly run based on what you want, without looking up any example code. The image it shows is:

Is it multilingual? PHP example…

The code completion works in PHP files as well, in fact I was able to make these similarly in a .php file, typing no code just comments, enter, tab…

<?php

// Function to multiply numbers and test if prime

function isPrime($num) {

if ($num == 1) {

return false;

}

for ($i = 2; $i < $num; $i++) {

if ($num % $i == 0) {

return false;

}

}

return true;

}

// Create a html table with ['alpha','delta',beta'] as the first row.

// and ascending rows of numbers.

echo "<table>";

echo "<tr><th>alpha</th><th>delta</th><th>beta</th></tr>";

for ($i = 1; $i < 10; $i++) {

echo "<tr>";

echo "<td>" . $i . "</td>";

echo "<td>" . ($i + 1) . "</td>";

echo "<td>" . ($i + 2) . "</td>";

echo "</tr>";

}

echo "</table>";

Conclusion

So in conclusion, this seems like a fairly interesting , very useful way to quickly get some starting point for coding… congrats to the Github/copilot team for an ingenious auto complete that goes further than that “word by word” autocomplete we often use from the dropdown in an IDE. Coders often go to Stackoverflow and other tutorial sites for “writer’s block” during building of a project, and this might make certain functions quite fast to build. However the impertinent answers it sometimes give might be an issue and privacy might be more of an issue on some projects.