Human pose estimation is useful in robotics and programming because it lets you work out what position a person is in from a picture. For last weekend’s Hackrithmitic I ran a fun experiment with computer-vision pose estimation. To start with, I found several options among the available libraries:

- TensorFlow.js has been used to build a “don’t touch your face” warning, but it takes a massive amount of CPU.

- OpenPose is a popular one, but it is only licensed for noncommercial research use. There is an OpenCV example for it, though it doesn’t quite show how to use it.

- AlphaPose is supposedly faster and has a clearer license that allows commercial use, if you want that option. I followed the install instructions and they worked, but only with “python3” instead of “python”. They also omit the obvious step of installing CUDA for your Nvidia system before running.

- GluonCV is another, and it seems more user-friendly. I was able to get this one running in a few minutes with their example:

As their documentation describes, you should install the package that matches your machine. For my Ubuntu 18.04 system (with the system nvidia-cuda-toolkit 9.1.85) this was:

    pip3 install --upgrade mxnet-cu91mkl
    pip3 install --upgrade gluoncv

Unlike the others, their examples are easy to get up and running quickly. In fact, running the example here downloads and sets up a working example.

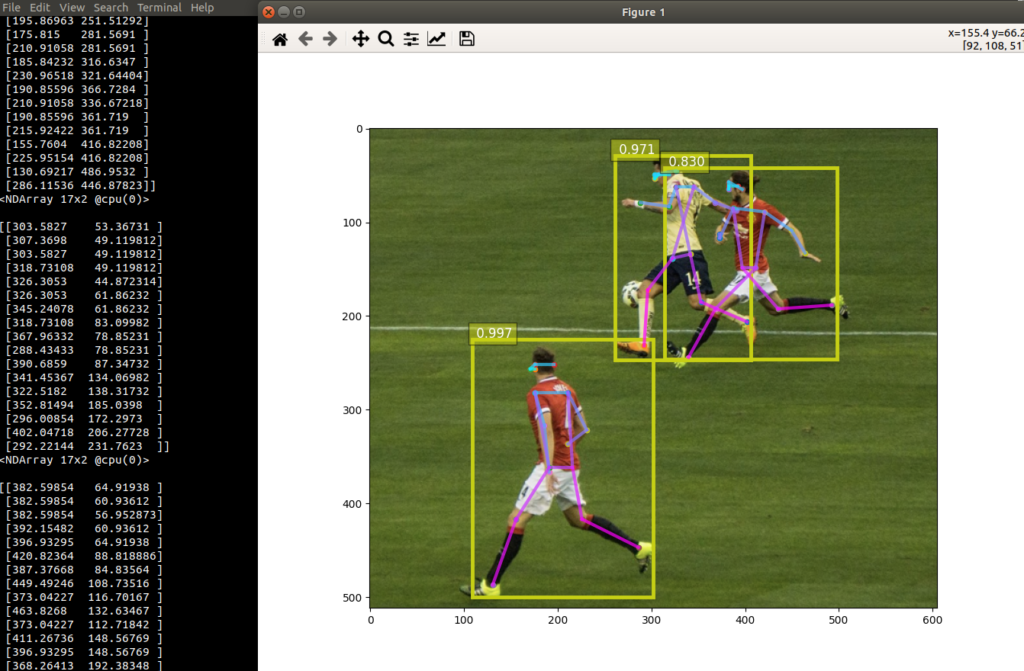

Disassembling result array, adding some math to the example code

Let’s say we want to use each of these points in a calculation. First, let’s see what the example outputs by adding a loop below its pred_coords line:

    predicted_heatmap = pose_net(pose_input)
    pred_coords, confidence = heatmap_to_coord(predicted_heatmap, upscale_bbox)

    # Print the raw keypoint array for each detected person
    for person in pred_coords:
        print(person)

Now I can see that each entry of pred_coords is apparently an array of points. Looking at the Matplotlib view and hovering over the marked coordinates, the first one is a point on the head:

Cool. Comparing the printed data with the points visible when zooming in on the Matplotlib figure, it appears that the first 5 are coordinates on the head and the last two are the feet. The 10th and 11th items (indices 9 and 10) are the hands. We can separate these out and print them to verify:

    for person in pred_coords:
        print(person)
        print(person[0])

        # Indices found by comparing the printout with the plot
        hand1 = person[9]
        hand2 = person[10]
        leg1 = person[15]
        leg2 = person[16]

        print('feet:')
        print(leg1)
        print(leg2)
        print('hands:')
        print(hand1)
        print(hand2)
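To make the index bookkeeping easier to follow, here is a minimal sketch that groups the indices observed above under names. The names `HEAD`, `HANDS`, `FEET` and `split_keypoints` are my own, not part of GluonCV, and the data is a made-up stand-in array rather than real model output.

```python
import numpy as np

# Index groups as observed in this post: the first five points are on the head,
# items 9 and 10 are the hands, and the last two (15 and 16) are the feet.
HEAD = [0, 1, 2, 3, 4]
HANDS = [9, 10]
FEET = [15, 16]


def split_keypoints(person):
    """Return (head, hands, feet) sub-arrays for one person's (17, 2) keypoints."""
    person = np.asarray(person, dtype=float)
    return person[HEAD], person[HANDS], person[FEET]


# Stand-in data: 17 made-up (x, y) points, not real model output.
fake_person = np.arange(34, dtype=float).reshape(17, 2)
head, hands, feet = split_keypoints(fake_person)
print(head.shape, hands.shape, feet.shape)
```

With real output you would first convert each person to NumPy (MXNet arrays provide `.asnumpy()`) before passing it in.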

Cool. Now let’s do some basic math to see who is touching their face, or close to it. First we need a way to compute distances. In NumPy, the Euclidean distance is the L2 norm in linear-algebra speak, and we can verify in the Python console that it does what we want:

    $ python3
    Python 3.6.9 (default, Jul 17 2020, 12:50:27)
    [GCC 8.4.0] on linux
    Type "help", "copyright", "credits" or "license" for more information.
    >>> import numpy as np
    >>> np.array
    <built-in function array>
    >>> np.array([1,2])
    array([1, 2])
    >>> np.array([1,2]) - np.array([1,3])
    array([ 0, -1])
    >>> np.linalg.norm(np.array([1,2]) - np.array([1,3]))
    1.0
    >>> np.linalg.norm(np.array([100,100]) - np.array([101,101]))
    1.4142135623730951
    >>> np.linalg.norm(np.array([100,100]) - np.array([105,101]))
    5.0990195135927845

Unfortunately, this library returns an odd type of array, so to get the resulting distance as a single number I had to use .item().asnumpy().item():

    def dist(np1, np2):
        # Euclidean distance between two keypoints, unwrapped to a plain number
        return np.linalg.norm(np1-np2).item().asnumpy().item()
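If the chained conversion feels fragile, one possible variation is to convert each point to a plain NumPy array first and take the norm afterwards. This is only a sketch: `to_numpy` is a helper name I made up, and it relies on MXNet arrays exposing `.asnumpy()`; I have only exercised it here with plain lists.

```python
import numpy as np


def to_numpy(point):
    """Convert an MXNet NDArray (which has .asnumpy()) or any array-like to a float NumPy array."""
    if hasattr(point, "asnumpy"):
        point = point.asnumpy()
    return np.asarray(point, dtype=float)


def dist(p1, p2):
    """Euclidean (L2) distance between two points, returned as a plain Python float."""
    return float(np.linalg.norm(to_numpy(p1) - to_numpy(p2)))


# Same numbers as in the console session above
print(dist([100, 100], [105, 101]))
```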

It makes sense to wrap the distance calculation in a dist function; otherwise it would be very unwieldy to change every call if we found something wrong with the calculation. Next, let’s compare the distance between head points with the distance from the head points to the end of each arm. In other words, does the detected person have a hand near their head?

    # (this runs inside the per-person loop, after hand1 and hand2 are set)
    # Estimate the head size: the largest distance from the first head point
    # to any of the five head points (indices 0..4).
    head1 = person[0]
    headdist = dist(head1, person[1])
    for i in range(5):
        d = dist(head1, person[i])
        if d > headdist:
            headdist = d
    print('headdist about %s' % (headdist,))

    # Is a hand anywhere near the head points, i.e. within THRESHOLD head
    # distances (in pixels) of any head point?
    close = False
    THRESHOLD = 2  # relative distance within which we consider it touching
    for i in range(5):
        #print('handdist %s' % (dist(hand1,person[i]),) )
        #print('handdist %s' % (dist(hand2,person[i]),) )
        if dist(hand1, person[i]) < headdist*THRESHOLD:
            close = True
        if dist(hand2, person[i]) < headdist*THRESHOLD:
            close = True

    if close:
        print("Person touching face DONT TOUCH YOUR FACE")
    else:
        print("Person not touching face")
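The same check can also be written without explicit loops. The sketch below is a variation of mine, not the code from the demo: it assumes the keypoints have already been converted to a plain NumPy array of shape (17, 2), uses all five head points, and the function name `is_touching_face` and the sample coordinates are made up for illustration.

```python
import numpy as np


def is_touching_face(person, threshold=2.0):
    """Rough check: is either hand within `threshold` head-sizes of any head point?"""
    person = np.asarray(person, dtype=float)
    head = person[:5]        # first five keypoints: head
    hands = person[[9, 10]]  # items 9 and 10: hands

    # Head size: largest distance from the first head point to any head point.
    headdist = np.linalg.norm(head - head[0], axis=1).max()

    # Pairwise hand-to-head distances via broadcasting: shape (2 hands, 5 head points).
    hand_to_head = np.linalg.norm(hands[:, None, :] - head[None, :, :], axis=2)
    return bool((hand_to_head < headdist * threshold).any())


# Made-up keypoints, not real model output: head points clustered near (100, 50).
person = np.zeros((17, 2))
person[:5] = [[100, 50], [95, 45], [105, 45], [90, 50], [110, 50]]
person[9] = [300, 400]   # both hands far from the head
person[10] = [310, 400]
far_result = is_touching_face(person)
print(far_result)

person[9] = [104, 60]    # move one hand next to the head
near_result = is_touching_face(person)
print(near_result)
```

Keeping the threshold as a parameter makes it easy to experiment with how strict the "near the face" test should be.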

Full code is available here and a quick demo shows the results with several different types of photo:

Upgrade Update

Suppose you update your drivers, or upgrade to Ubuntu 20.04 or whatever is latest. Synaptic should help you track down the latest supported Nvidia drivers.

    sudo apt install libnvrtc10.1 libcufft10 libcurand10 libcusolver10 libcusolvermg10 libcusparse10 libcublaslt10 libcublas10 libcudart10.1 libnvidia-compute-460-server

Now it should work with the latest library as linked:

    pip3 install --upgrade mxnet-cu101mkl

Note that “cu101” corresponds to the CUDA 10.1 libraries installed above; if the two do not match, you will get an error when running it.
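The naming pattern in the two install commands in this post (CUDA 9.1 with mxnet-cu91mkl, CUDA 10.1 with mxnet-cu101mkl) can be sketched as a small helper. The function `mxnet_package_for_cuda` is hypothetical and only builds the name; it does not check that a package of that name is actually published, so treat the result as a starting point.

```python
def mxnet_package_for_cuda(cuda_version, mkl=True):
    """Build a pip package name such as 'mxnet-cu101mkl' from a CUDA version string."""
    # Only the major and minor parts matter: "9.1.85" -> "9", "1"
    major, minor = cuda_version.split(".")[:2]
    name = "mxnet-cu%s%s" % (major, minor)
    return name + "mkl" if mkl else name


print(mxnet_package_for_cuda("9.1.85"))
print(mxnet_package_for_cuda("10.1"))
```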