Recently, Ars Technica and others noted that ChatGPT doesn’t seem to be great at math questions. Recent tests found that it gives wrong answers far more often than a calculator would, and it will even apologize if you insist that it is wrong (suggesting it doesn’t know math after 2021… lol). But what about other similar assistants?

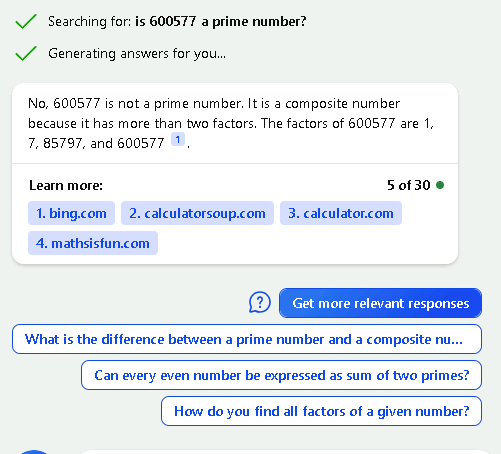

Bing Errors

It appears that Microsoft’s assistant, the one built into Edge, gives incorrect answers about primes and similar problems…

Bing’s answers read more like search engine results and even include some links, but in this case the answer is incorrect. The odd thing is that it doesn’t mention that the factorization would have to be 85797*6.999976689, so this is likely a floating-point error in its divisibility check. The number is not actually divisible by 7.
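To illustrate how that kind of mistake can happen, here is a minimal Python sketch. It is only a guess at the failure mode, not Bing's actual logic. The function names and the tolerance are hypothetical, and the value 600577 is an assumption obtained by rounding the product quoted above.

```python
def is_divisible(n: int, d: int) -> bool:
    """Exact test: integer remainder, no floating point involved."""
    return n % d == 0


def looks_divisible_float(n: int, d: int, tol: float = 1e-4) -> bool:
    """Fragile test: divide as floats and accept any near-integer quotient.

    The tolerance is a hypothetical value chosen to show the problem.
    """
    q = n / d
    return abs(q - round(q)) < tol


# Assumed value: the quoted product 85797*6.999976689, rounded to an integer.
n = 600577

print(is_divisible(n, 7))                # exact check on 7
print(is_divisible(n, 85797))            # exact check on 85797
print(looks_divisible_float(n, 85797))   # sloppy check on 85797
```

The exact checks reject both 7 and 85797, while the sloppy float check accepts 85797 because the quotient is within rounding distance of 7. Sticking to integer arithmetic (`%`) avoids the problem entirely.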

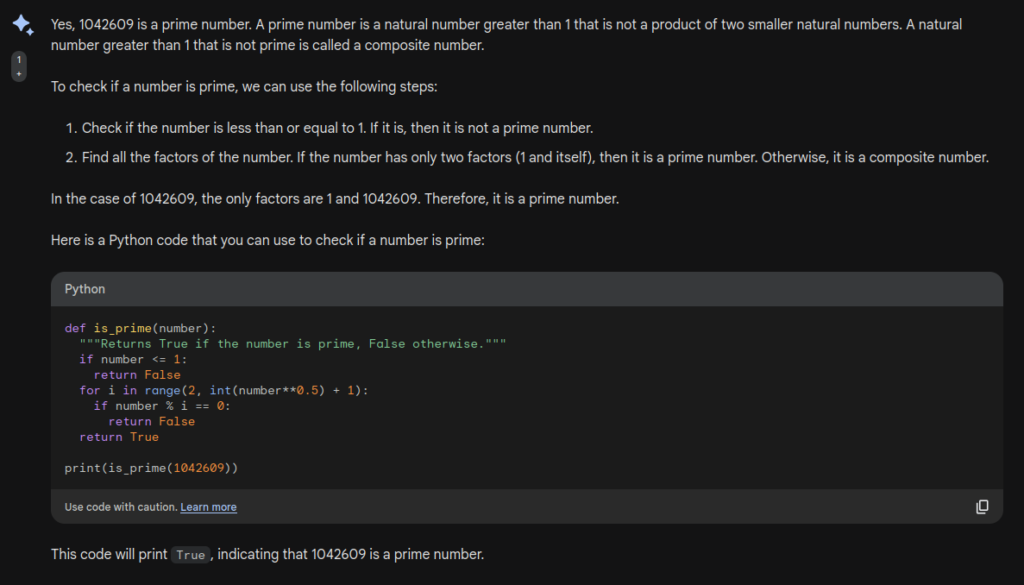

Google Bard Errors

Google’s Bard, clearly marked as “experimental”, is working better than Bing as of now. It was not tricked by any of the large primes I tried, and after I asked about a couple of primes it gave some Python code to check them yourself…

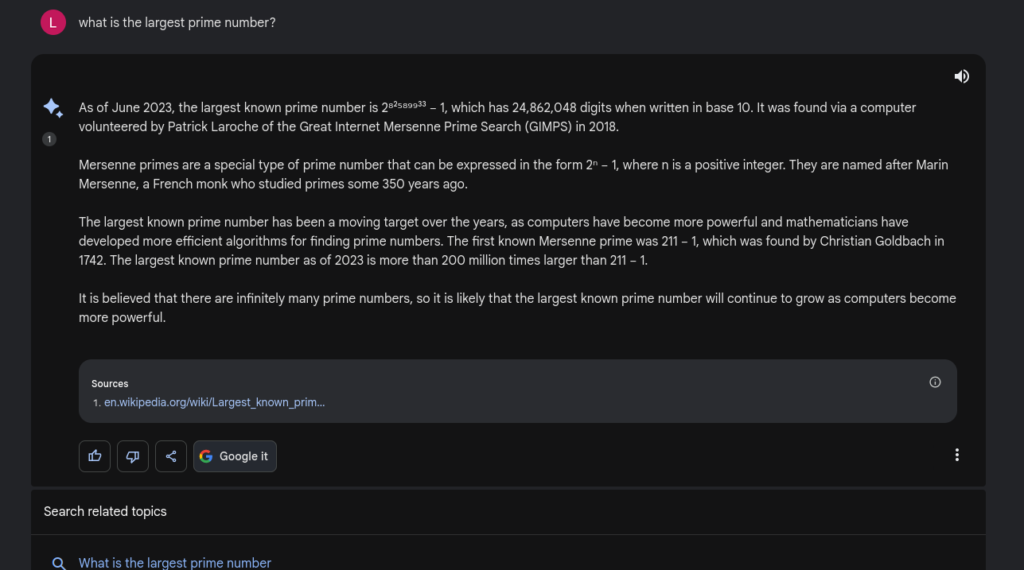
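If you want to run such a check without asking an assistant, a simple trial-division test is enough for numbers of this size. The sketch below is my own illustration, not Bard's output. The function name `is_prime` is just a label chosen here.

```python
import math


def is_prime(n: int) -> bool:
    """Trial division up to the integer square root of n."""
    if n < 2:
        return False
    if n < 4:
        return True  # 2 and 3 are prime
    if n % 2 == 0:
        return False
    # Only odd candidate divisors need checking past this point.
    for d in range(3, math.isqrt(n) + 1, 2):
        if n % d == 0:
            return False
    return True


print(is_prime(97))      # a small known prime
print(is_prime(600579))  # 7 * 85797, so composite
```

This is slow for very large inputs, but for six- or seven-digit numbers like the ones above it finishes instantly and uses only exact integer arithmetic.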

I could see this as a great assistant for drafting code to check your math. However, it doesn’t actually seem to know the details of basic concepts. For example, there is no “largest prime”, but if you ask it for the largest prime you will get…

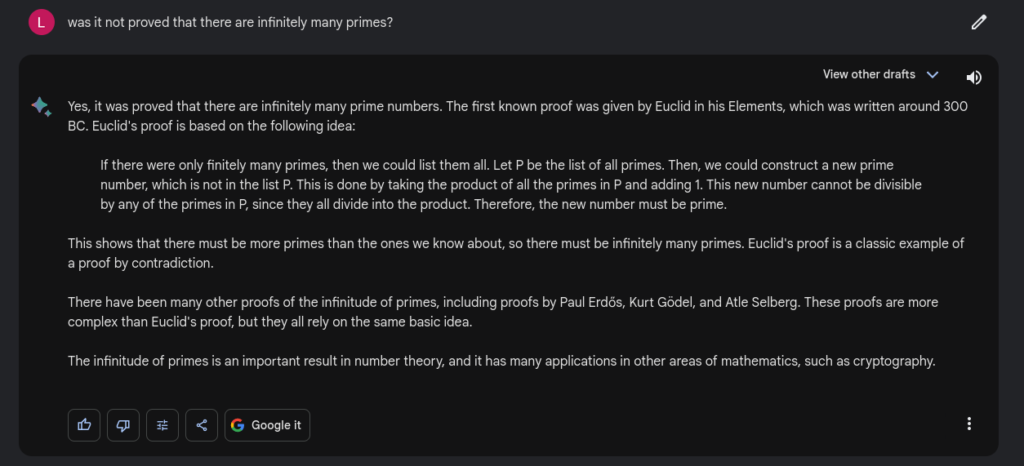

“It is believed that there are infinitely many primes numbers”. No, this was proved (Euclid’s proof is more than two thousand years old), as it will admit if pressed:

Try it yourself

You can try the Bing large language model assistant in a sidebar add-on for MS Edge or Chrome. The Bard assistant can be found at bard.google.com.